Introduction

In this blog, I will go through designing a distributed game server and the theory behind it.

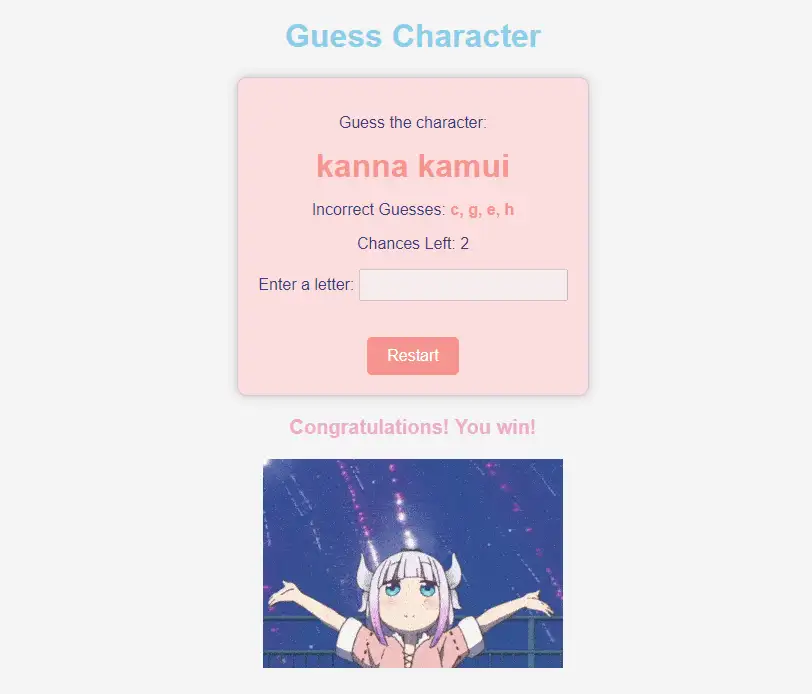

The game is Hangman with a Kanna theme. The game itself doesn’t make any sense to be distributed, this is just a project I made for fun to sharpen my skills in building a distributed system.

I will not show any code in this article but rather some theory and architecture, if you have questions feel free to contact me or read the source code at dhangkanna to see the implementation details.

Light Theory

Distributed systems are complex by nature and hard to get right, many things can and will go wrong. I will try to explain things in their simplest form. If you like to dive deeper, there are great books that address distributed systems, the ones that I’ve read are Designing Data-Intensive Applications , Understanding Distributed Systems and the one that this entire article was based on Distributed Services with Go .

I recommend that software engineers interested in distributed systems read them they are not theory-heavy, but they address necessary knowledge that any software engineer needs to know about distributed systems.

In this article, I will address only the things that I implemented in my project.

In distributed systems, the servers need to act as one big server and to achieve that the servers need to:

- Communicate with each other (Transportation protocol)

- know about each other (Discovery)

- Agree on the same state (Consensus)

I will address each one and explain what it is, so let’s start.

Transportation protocol

The most common ways of communicating between servers are REST and RPC, both can work just fine.

RPC is used when low latency is required because it uses binary as an encoding method for the data, while REST uses objects.

REST is usually better when the client is involved in the communication thanks to its human-readable form.

but in our system, the communication is between servers, so a better option is RPC

There is plenty of resources on REST vs RPC so no need to go into more details here.

I am using gRPC for RPC

Discovery

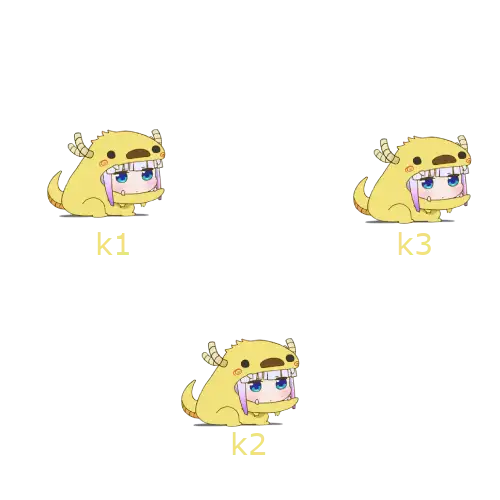

let’s take a look at this picture

each kanna represents a server, each server running in isolation from the other server so they don’t know anything about each other.

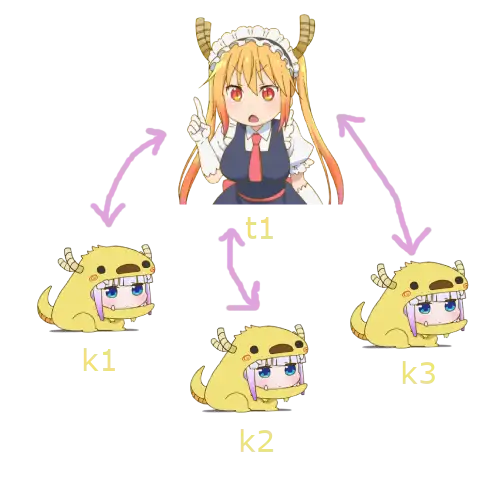

for the servers to know about each other someone has to manage the list of servers and their health.

In kafa the one who is responsible for that is zookeeper

tohru here is playing the role of zookeeper she will keep her eye on kannas and when a new kanna joins the cluster or shutdown/fails she will know about that.

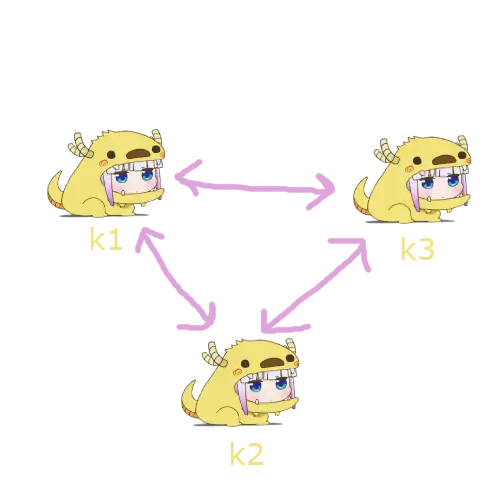

Another way is how redis does it is by using the gossip protocol. As the name says, in this protocol, each server will have information about other servers, and each server will communicate its list of servers with other servers, so in the end, the servers end up knowing about each other.

let’s take this example k1 knows about k2, k2 knows about k3 and k3 knows about none of bove. k1 and k2 will exchange their list of servers so now k1 and k2 know about k1,k2,k3 and k2 or k1 will exchange this list of members with k3 so know all the kanna’s know about each other.

In my app, I used hashicorp library called serf

. serf is the library that consul

uses internally for discovery.

serf will work by emitting events to the servers mainly:

- join

- leave

- failed

more about events details are listed in the documentation serf event handlers

when a server joins it will emit an event to the other servers (you need to pass the list of servers to emit to) then they will start to propagate this event to other servers. The same applies to all the other events.

Consensus

Okay, so now we have a way for servers to know about each other. But yet they are not organized nor share the same state.

To have the same state across servers we need an algorithm that will make sure that all machines are in fact in the same state.

There are a couple of algorithms like Paxos and Raft, I didn’t learn about Paxos but I’ve read that it’s too complex for developers to implement.

So we are going to use Raft algorithm, luckily Hashicorp has its own implementation in Go hashicorp/raft

The servers need to agree on the same state, so we need a server that will act as a leader.

The leader’s role is to keep all the followers in sync, which means every change that happens to a leader will be synced with all the followers.

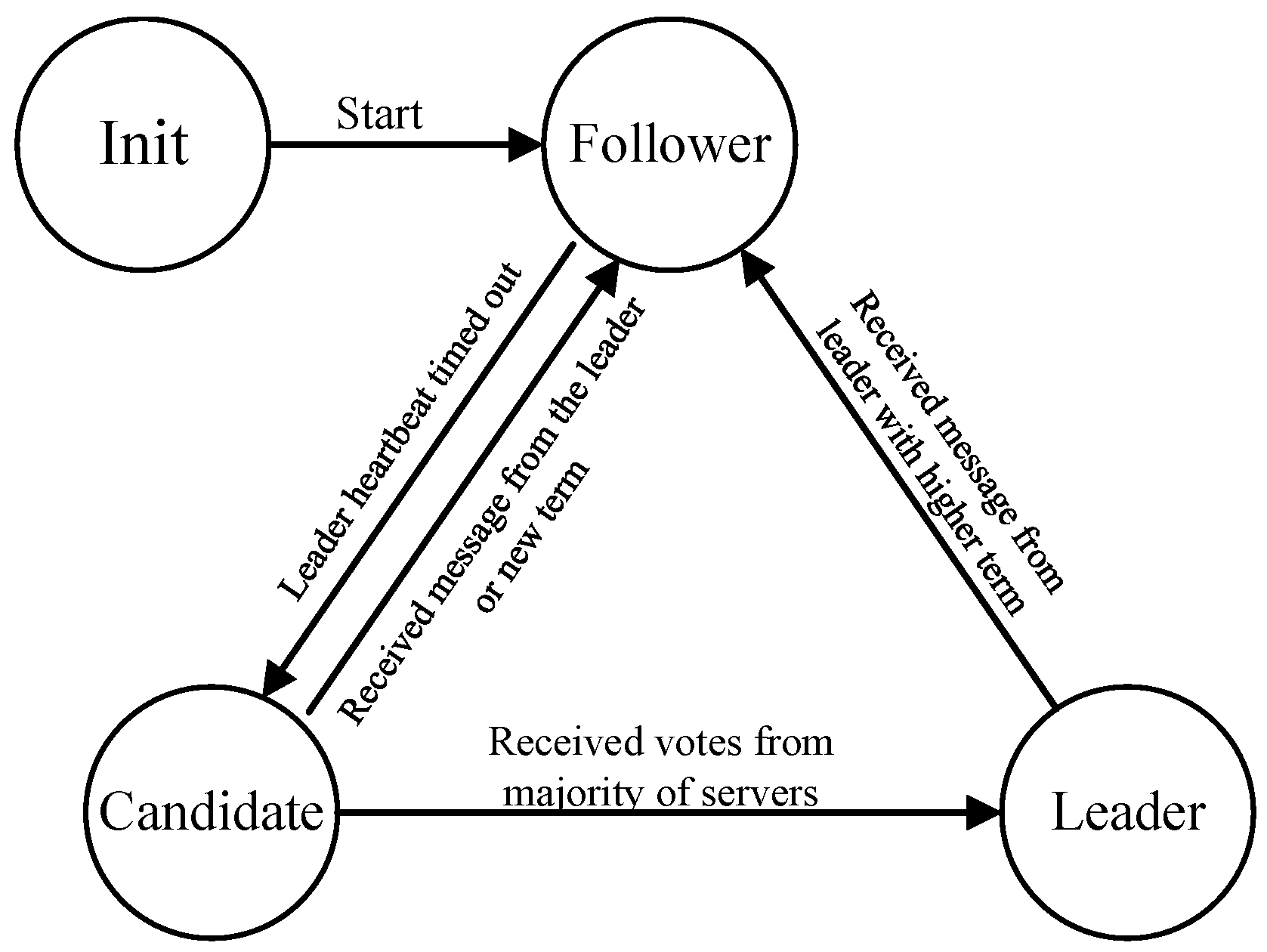

This state machine represents the states of a server.

When a server joins the cluster it will enter the follower state, when the heartbeat with the leader timeout it will enter the candidate state. If the heartbeat with the leader succeeds or a new term is received then it will return to the follower state. If the candidate receives the majority of votes it will be elected as the leader.

Another thing worth noting here is the number of servers is odd, and that’s to achieve the quorum.

quorum is the minimum number of votes a server needs to have to move from the candidate to the leader state and it’s usually where is the quorum and is the number of servers in the cluster.

it has to be an odd number to avoid a split-brain issue because when the number is odd there is a probability that two servers will get the same number of votes and we can’t have more than one leader.

Architecture

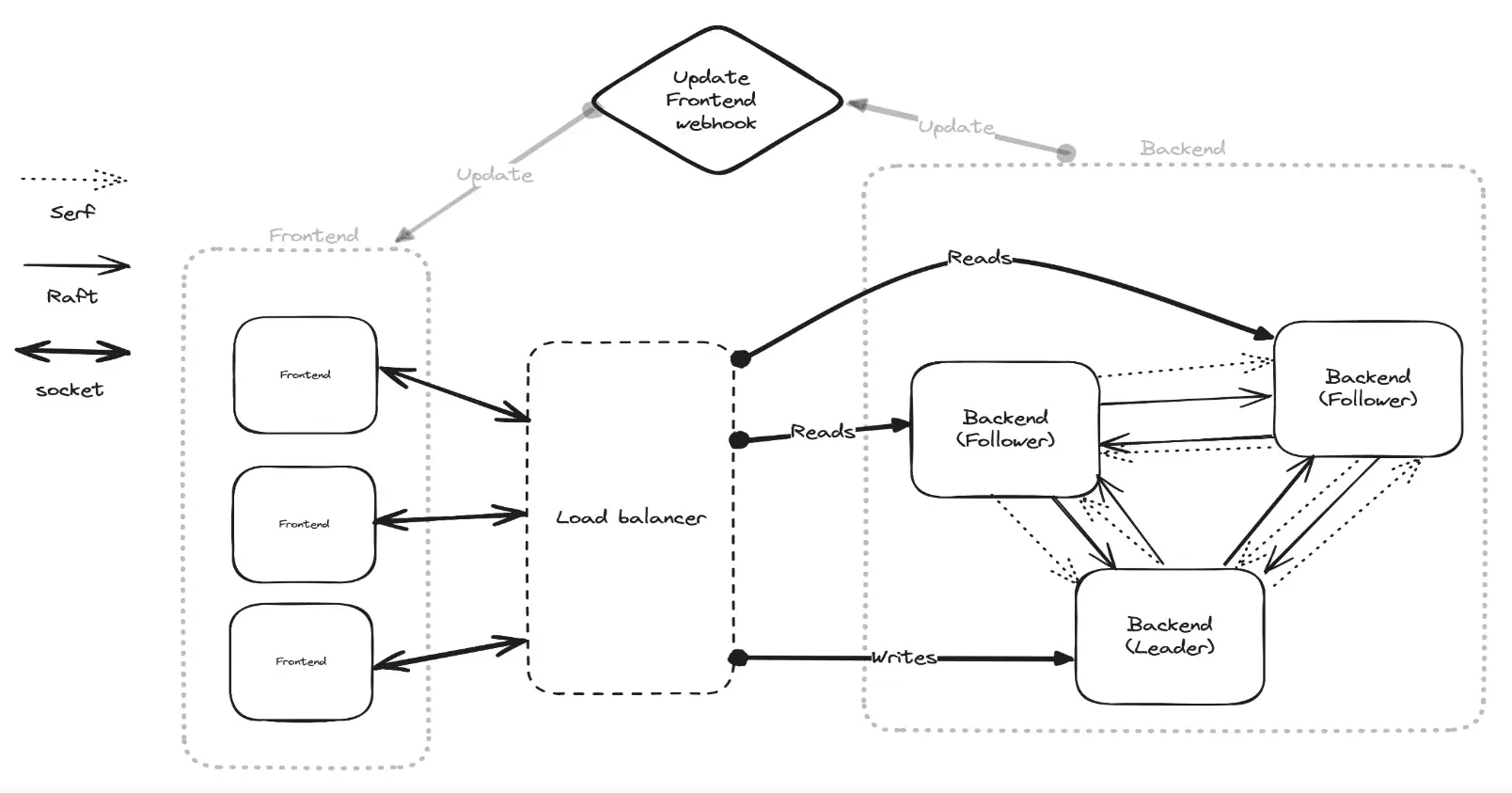

In my project I am serving the frontend from a Go server, don’t get confused between the backend which holds the state of the game and the frontend, the frontend server itself doesn’t have to do anything with the state of the game it just maintain a socket connection to a backend node.

When a player enters a character the gRPC load balancer will redirect the call to the leader, after that the leader will copy the state to all of the followers, then the webhook will update the frontend for other pages. All the frontend updates and initialization will be handled by followers.

I will end the article with a gameplay demo